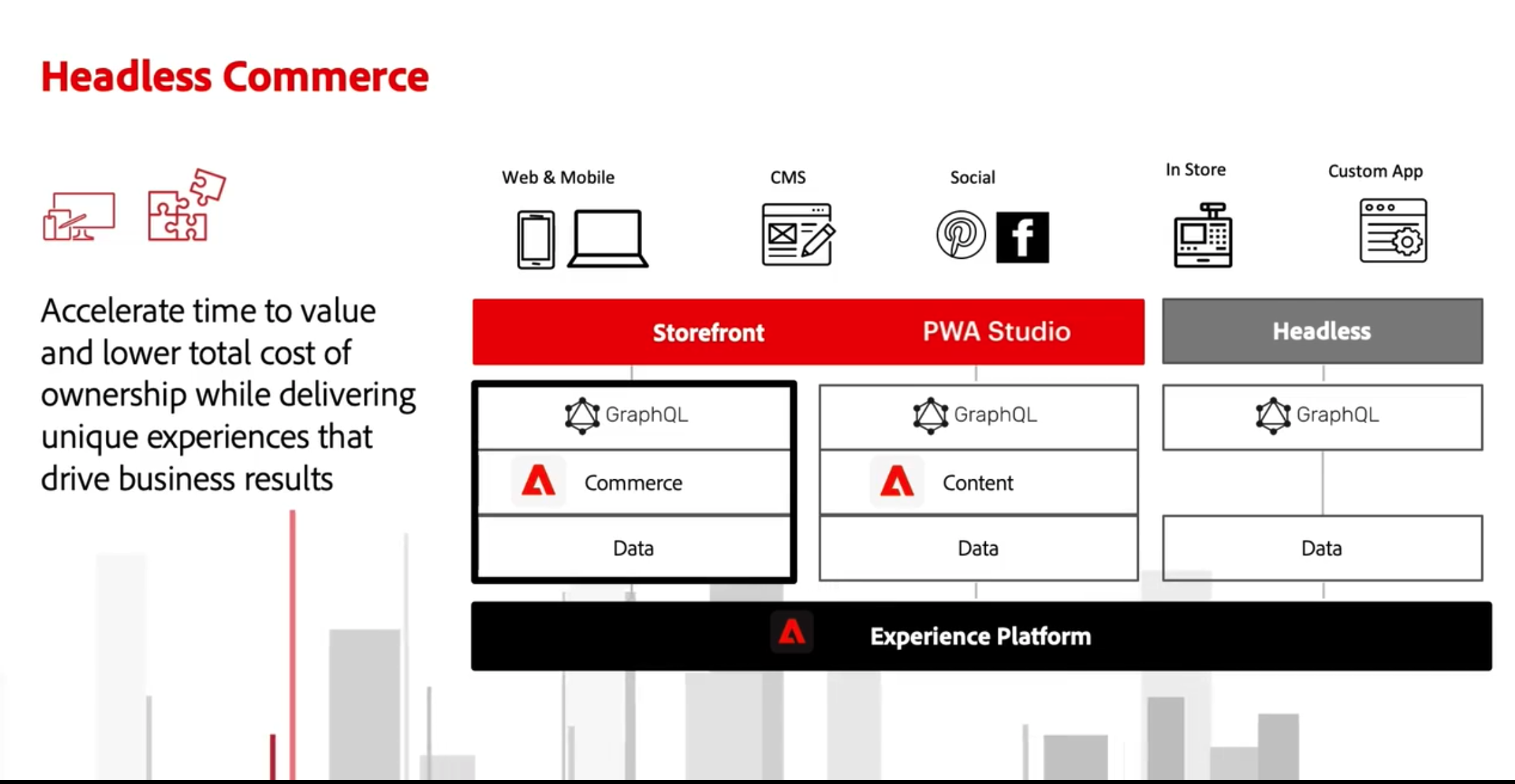

As Magento ventures further down the path towards a more integrated solution as part of the Adobe family, we are seeing more and more graphics like the below:

These graphics indicate that we can easily mix and match Commerce with other offerings, combining functionality for different needs as required, with Headless as the buzzword underpinning this trend. One of the things easily glossed over in the neat-looking graphics is the underlying infrastructure that delivers everything.

With more and more channels on offer, it becomes increasingly unlikely that everything will be available through the same domain. In the past we might have had a very monolithic-looking application sitting on one domain and providing everything: example.com. In a headless reality we will have multiple domains and servers involved, likely delivered and used by different teams across different parts of the organisation. So we can easily imagine something like the following:

web.example.com

instore-kiosk.example.com

popup-store.example.com

salesteam.example.com

all connecting to the same graphql backend available on backend.example.com.

Unfortunately, in their attempts to make the internet a safer place, browsers are quite restrictive about requests made from one domain to another (cross-domain requests).
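To make "cross domain" a little more concrete: browsers compare origins, meaning the combination of scheme, host and port. The following is a minimal sketch of that comparison using the standard `URL` class. The function name `isCrossOrigin` and the `https` scheme are my own assumptions for illustration.

```javascript
// Two URLs share an origin only if scheme, host and port all match.
function isCrossOrigin(pageUrl, requestUrl) {
  return new URL(pageUrl).origin !== new URL(requestUrl).origin;
}

// A page on web.example.com calling the shared backend is cross-origin.
const kioskToBackend = isCrossOrigin(
  'https://web.example.com/',
  'https://backend.example.com/graphql'
);

// The old monolith on a single domain is not.
const monolith = isCrossOrigin(
  'https://example.com/',
  'https://example.com/graphql'
);

console.log(kioskToBackend, monolith);
```

Note that even a different subdomain or a switch from http to https counts as a different origin.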

How does Adobe currently deal with this?

To my knowledge, Adobe Commerce Cloud currently (mid 2021) only supports a single domain when using PWA Studio, sidestepping this issue completely.

The other solution provided by Adobe is part of their PWA Studio upward.js package (it's not available on Commerce Cloud). It includes a proxy mechanism that again neatly sidesteps the need to deal with cross domain requests.

The below is an overview of how a request for a graphql resource would get handled:

1. browser sends request to frontend.example.com/graphql

2. frontend (a node application) via upward.js proxies request to backend.example.com/graphql

3. backend receives request on full page cache entrypoint (varnish)

4. decides if request can be fulfilled from cache, if yes proceed to 8

5. request is forwarded to webserver (nginx/apache)

6. request is forwarded to Magento 2 application (php)

7. Magento 2 generates response

8. response is sent to frontend.example.com

9. response is sent to browser

While this works well, I can't help but think that having an additional layer proxying the request will always add some latency (as well as complexity). We should be able to use the following domain setup:

frontend.example.com (hosts a frontend to a browser using any framework interacting via graphql with the back-end - could be PWA Studio but doesn't have to be)

backend.example.com (we are mostly interested in the /graphql endpoint)

with a shortened path to the response looking like this:

1. browser sends request to backend.example.com/graphql

2. backend receives request on full page cache entrypoint (varnish)

3. decides if request can be fulfilled from cache, if yes proceed to 7

4. request is forwarded to webserver (nginx/apache)

5. request is forwarded to Magento 2 application (php)

6. Magento 2 generates response

7. response is sent to browser

We also want the response to be as fast as possible. According to the DevDocs, a few queries should be served from the full page cache. Let's pick a urlResolver query as an example that should be delivered quickly from the full page cache:

query: query ResolveURL($url:String!){urlResolver(url:$url){id relative_url redirectCode type __typename}}

operationName: ResolveURL

variables: {"url":"/"}

However, we also need to add the store code to the request as a custom header, Store: default. See the current overview of request headers in the DevDocs.
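Put together, the request could be assembled roughly like this. This is only a sketch: the helper name `buildGraphqlGetRequest` and the `https` scheme are my own assumptions, and the three fields are simply passed as query string parameters on a GET request so that the full page cache has a chance to answer it.

```javascript
const query =
  'query ResolveURL($url:String!){urlResolver(url:$url){id relative_url redirectCode type __typename}}';

// Hypothetical helper: puts the GraphQL payload into the query string
// and adds the store code as the custom Store header.
function buildGraphqlGetRequest(endpoint, { query, operationName, variables }, storeCode) {
  const url = new URL(endpoint);
  url.searchParams.set('query', query);
  url.searchParams.set('operationName', operationName);
  url.searchParams.set('variables', JSON.stringify(variables));
  return {
    url: url.toString(),
    options: { method: 'GET', headers: { Store: storeCode } },
  };
}

const request = buildGraphqlGetRequest(
  'https://backend.example.com/graphql',
  { query, operationName: 'ResolveURL', variables: { url: '/' } },
  'default'
);

// In a browser this would be sent with: fetch(request.url, request.options)
console.log(request.url);
```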

To illustrate some of the repercussions these custom headers have, let's follow the same example query. To understand what happens next we need to take a look at Cross-Origin Resource Sharing (CORS). The following is a great resource on explaining CORS. The part we are interested in is the explanation of what constitutes a simple request and when it becomes complex.

Comparing our request against the given criteria:

- It's either a GET or POST request ✅

- Only CORS safelisted headers are used (Accept, Accept-Language, Content-Language, Content-Type) ❌

- Content-Type is one of the accepted values (application/x-www-form-urlencoded, multipart/form-data, text/plain) ❓

We'll get back to the Content-Type later, but the Store custom header forces the browser to upgrade the request to a complex one. Before a complex request can be executed, the browser sends what is called a preflight request, essentially checking with the server whether it will be okay to send the complex request next. This preflight is an additional OPTIONS request to the server, carrying an Origin header and a range of CORS specific headers that indicate to the server the shape of the complex request to come. If the server is going to allow the request, it responds with a 204 response that again includes some additional CORS specific headers.
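The criteria above can be approximated in a few lines. This is a simplified sketch rather than the full algorithm browsers implement (the real rules also restrict header values and lengths), and the name `needsPreflight` is made up for this example. I have included HEAD alongside GET and POST, as it is also treated as a simple method.

```javascript
const SIMPLE_METHODS = ['GET', 'HEAD', 'POST'];
const SAFELISTED_HEADERS = ['accept', 'accept-language', 'content-language', 'content-type'];
const SIMPLE_CONTENT_TYPES = [
  'application/x-www-form-urlencoded',
  'multipart/form-data',
  'text/plain',
];

// Returns true when the browser would send an OPTIONS preflight first.
function needsPreflight(method, headers) {
  if (!SIMPLE_METHODS.includes(method.toUpperCase())) return true;
  for (const [name, value] of Object.entries(headers)) {
    const lowerName = name.toLowerCase();
    // Any header outside the safelist makes the request "complex".
    if (!SAFELISTED_HEADERS.includes(lowerName)) return true;
    if (lowerName === 'content-type') {
      // Ignore parameters such as "; charset=utf-8".
      const mimeType = value.split(';')[0].trim().toLowerCase();
      if (!SIMPLE_CONTENT_TYPES.includes(mimeType)) return true;
    }
  }
  return false;
}

console.log(needsPreflight('GET', { Store: 'default' })); // true
console.log(needsPreflight('GET', { 'Content-Type': 'text/plain' })); // false
```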

Out of the box Magento 2 does not handle those requests. For the moment the Graycore CORS M2 extension looks to be the most complete solution to add CORS support to Magento 2.
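To give an idea of what handling those requests involves, here is a rough sketch of the decision a server has to make for a preflight. It is not how the Graycore extension or Magento implement it. The function name, the shape of the `corsPolicy` object and the 403 status for rejected preflights are my own assumptions, and header names are expected in lower case.

```javascript
// Hypothetical policy: which origins, methods and headers we accept.
const corsPolicy = {
  origins: ['https://frontend.example.com'],
  methods: ['GET', 'POST'],
  headers: ['store', 'content-currency', 'content-type'],
  maxAgeSeconds: 600,
};

function buildPreflightResponse(requestHeaders, policy) {
  const origin = requestHeaders['origin'];
  const requestedMethod = requestHeaders['access-control-request-method'];
  const requestedHeaders = (requestHeaders['access-control-request-headers'] || '')
    .split(',')
    .map((name) => name.trim().toLowerCase())
    .filter((name) => name !== '');

  const allowed =
    policy.origins.includes(origin) &&
    policy.methods.includes(requestedMethod) &&
    requestedHeaders.every((name) => policy.headers.includes(name));

  if (!allowed) {
    // Without the CORS headers the browser will not send the actual request.
    return { status: 403, headers: {} };
  }
  return {
    status: 204,
    headers: {
      'Access-Control-Allow-Origin': origin,
      'Access-Control-Allow-Methods': policy.methods.join(', '),
      'Access-Control-Allow-Headers': policy.headers.join(', '),
      // Lets the browser cache the answer and skip repeated preflights.
      'Access-Control-Max-Age': String(policy.maxAgeSeconds),
      Vary: 'Origin',
    },
  };
}

const preflight = buildPreflightResponse(
  {
    origin: 'https://frontend.example.com',
    'access-control-request-method': 'GET',
    'access-control-request-headers': 'store',
  },
  corsPolicy
);
console.log(preflight.status);
```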

But even with Magento 2 configured to respond to CORS specific requests we still have double the network activity. To make matters worse, these OPTIONS requests end up going through to the Magento 2 application, as the default Varnish configuration only handles GET and HEAD requests (see here):

    # We only deal with GET and HEAD by default
    if (req.method != "GET" && req.method != "HEAD") {
        return (pass);
    }

Where to from here?

I believe we can do better and avoid most of this complexity.

I can see the original appeal in using a custom header on the GraphQl endpoint to provide additional ways to segment the response. But knowing how CORS works, there are other ways to achieve the same outcome. For example, the following patches against magento/module-store-graph-ql and magento/module-directory-graph-ql respectively "downgrade" the custom header to a parameter on the request. No preflight needed.

    --- Controller/HttpRequestValidator/StoreValidator.php 2020-09-23 20:00:54.000000000 +1200
    +++ Controller/HttpRequestValidator/StoreValidator.php 2021-02-01 20:36:00.000000000 +1300
    @@ -40,7 +40,7 @@
          */
         public function validate(HttpRequestInterface $request): void
         {
    -        $headerValue = $request->getHeader('Store');
    +        $headerValue = $request->getParam('Store') ? $request->getParam('Store') : $request->getHeader('Store');
             if (!empty($headerValue)) {
                 $storeCode = trim($headerValue);
                 if (!$this->isStoreActive($storeCode)) {

    --- Controller/HttpRequestValidator/CurrencyValidator.php 2021-02-01 20:33:33.000000000 +1300
    +++ Controller/HttpRequestValidator/CurrencyValidator.php 2021-02-01 20:33:20.000000000 +1300
    @@ -41,7 +41,7 @@
         public function validate(HttpRequestInterface $request): void
         {
             try {
    -            $headerValue = $request->getHeader('Content-Currency');
    +            $headerValue = $request->getParam('Content-Currency') ? $request->getParam('Content-Currency') : $request->getHeader('Content-Currency');
                 if (!empty($headerValue)) {
                     $headerCurrency = strtoupper(ltrim(rtrim($headerValue)));
                     /** @var \Magento\Store\Model\Store $currentStore */

The above patches are nice in the sense that they are backwards compatible until a more GraphQl conformant solution can be added. Or maybe the graphql entrypoint URL needs to change based on the store, for example backend.example.com/storecode/graphql.
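With the patches applied, the frontend side could look something like the sketch below. The parameter names Store and Content-Currency mirror the `getParam()` calls in the patches. The helper name `withStoreParams` and the `https` scheme are assumptions of mine.

```javascript
// Hypothetical helper: carries store and currency as query parameters
// instead of custom headers, so no custom header is sent at all.
function withStoreParams(graphqlUrl, { store, currency } = {}) {
  const url = new URL(graphqlUrl);
  if (store) url.searchParams.set('Store', store);
  if (currency) url.searchParams.set('Content-Currency', currency);
  return url.toString();
}

const storeScopedUrl = withStoreParams(
  'https://backend.example.com/graphql?operationName=ResolveURL',
  { store: 'default' }
);

// In a browser: fetch(storeScopedUrl) with no custom headers attached.
console.log(storeScopedUrl);
```

A nice side effect is that the store code becomes part of the URL, so different stores naturally end up as different cache entries.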

Sidenote: Content-type and PWA Studio

When using PWA Studio, Apollo Client is used to connect to the graphql endpoint. The default content type is application/json, which makes sense as the default request is a POST request with a JSON payload. But PWA Studio uses the setting customFetchToShrinkQuery, which converts all non-mutation requests into GET queries (to allow Varnish to serve the request). As part of this, the payload is moved into the requested URL to aid cacheability on the back-end. Unfortunately for us, the content-type describing the payload is still application/json even though the request body is empty. To address this we need to modify the shrinkGETQuery function PWA Studio uses so that it adjusts the content-type header used for the fetch request:

    options.headers = {
        'content-type': 'text/plain'
    };

    return fetch(url, options);